Screencast Transcript: Improving Webpack Build Times

Posted by Matt Surabian

On February 15, 2017 we had a screencast to talk about how to improve webpack build times by utilizing the new webpack HardSource plugin created by our colleague Z Goddard. This post contains the video of that event along with a transcript and visual aids.

If you’re interested in learning more about webpack, check out our upcoming event list!

Laura: Hey everyone, my name is Laura. Welcome to our screencast today on webpack and the HardSource plugin that my colleague Z Goddard has helped create. I want to say thanks so much for coming, and we’re really excited to get started. I’m going to introduce my colleague Matt Surabian, the Director of Web Apps, to take it away. You can ask questions in chat, and I believe Matt has posted a poll, but he’ll go over that. Thanks so much!

Matt: Hi. I think everyone can hear me. As Laura mentioned, I’m Matt Surabian, the Director of Web Applications at Bocoup. My colleague Z Goddard is here as well, and we want to talk today about webpack and the HardSource plugin and how you can improve your build time.

For about 5 or 10 minutes I’ll show you how it’s integrated into a project, and we can talk about a few gotchas you should know about if you’re adopting it. The rest of the time we really want to leave open for y’all to ask questions so we can help you use this stuff.

To that end, on the right hand side of your screen there’s a polls tab with three questions that will help us get to know how familiar you are with webpack and HardSource today, and that will help guide our content. If you could take a second to answer those questions that would be great. There is also a questions tab; even if you don’t have a question to ask, please keep monitoring that tab because you’ll be able to see everybody else’s questions and upvote or downvote them. That will help us prioritize them depending on how many we get.

I’ll get started by saying we’re going to be recording this so if you want to come back and watch a replay you’ll be able to do that and if you haven’t registered ahead of time we’ll be putting the video on youtube and writing a blog post so you can share that with folks.

To start I’m going to share my screen (sorry for the poor video replay quality!). At a high level it looks like pretty much everybody knows what webpack is based on the poll, but I’ll take a minute just to recap for anyone watching this who isn’t sure. webpack is a front end build tool and it’s designed to answer the question: “why should our build tool only be bundling JavaScript files?” We want to be able to write code that actually catalogs all of our module’s dependencies, so you can actually write something like this:

    /**
     * MyGreatModule
     */
    require('img/asset.png');
    require('theme.stylus');

    class MyGreatModule {
      constructor(options) {
        // Some awesome setup
      }
    }

That’s really powerful, not just because we can have webpack take over where something like Gulp or Grunt would need to do CSS preprocessing or anything like that, but also because webpack gives us all the tools we need to assess our bundle and chunk it out so the user is only required to download and consume exactly what they need to do what they are trying to do in your application. Even though most projects don’t get all the way into webpack code splitting, that’s really its promise: improved analysis and support of that critical path.

Think about if you’re writing a game with 20 levels. You don’t want someone who is coming to the game for the first time and playing level 1 to need to wait to download all the assets for levels 2 through 20. Or if you have a large application with many views, you can deliver those views and the code that implements them on demand as the user accesses those parts of your application.

The trouble is that we ask webpack to go beyond the land of concatenation and minification, the things that RequireJS and Browserify used to do for us (at least for me, that’s what I was using before webpack). webpack can do all those things and any other “-ation” you might need: transpilation, etc. The point is the more things you ask it to do, the longer it needs to do those things. If we were only cutting builds once a day or something like that to ship to production, maybe that wouldn’t matter. But now we’re writing code like this:

    require('img/asset.png');
    require('theme.stylus');

And this code isn’t strictly valid without webpack, right? What does it mean to require theme.stylus? We need webpack to actually do active development, and that can mean that we have to wait around a lot.

webpack has this multi-stage build process, and what my colleague Z’s plugin does is use the hooks that webpack gives us to dive in and cache those intermediate stages of a module so that webpack doesn’t have to go all the way out to the file system to resolve it and do all its processing on it. This is great especially for something like a third party dependency that hasn’t changed since you brought it into your application. Or say you have a particularly large app and you make some small change like adding a comma or inserting a semicolon: you don’t want to have to sit around and wait for every single third party dependency to rebuild every time you make a change.

Z and I can answer more technical questions about exactly how this works. I have the plugin code here, but I’m not sure exactly how much y’all want to get into that. We can definitely do that later, so please ask those questions if you’re curious how it works under the hood.
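To make the caching idea concrete, here is a minimal conceptual sketch. It is not how HardSource is actually implemented; `buildModules`, `hashSource`, and the uppercase "transform" are hypothetical stand-ins for webpack's real module build. The point is only that a module whose source hash is unchanged can reuse its previously built result.

```javascript
'use strict';
const crypto = require('crypto');

// Hash a module's source so we can tell whether it changed between builds.
function hashSource(source) {
  return crypto.createHash('md5').update(source).digest('hex');
}

// Hypothetical helper: "build" each module, reusing the cached result when
// the source hash matches what was stored on a previous run.
function buildModules(sources, transform, cache) {
  const output = {};
  const rebuilt = [];
  Object.keys(sources).forEach(function (name) {
    const hash = hashSource(sources[name]);
    const entry = cache.get(name);
    if (entry && entry.hash === hash) {
      output[name] = entry.result; // cache hit: skip the expensive work
      return;
    }
    const result = transform(sources[name]);
    cache.set(name, { hash: hash, result: result });
    output[name] = result;
    rebuilt.push(name);
  });
  return { output: output, rebuilt: rebuilt };
}

// Usage: the second build only redoes the file that changed.
const cache = new Map();
const upper = function (src) { return src.toUpperCase(); };
const first = buildModules({ 'pixi.js': 'engine', 'Dog.js': 'dog v1' }, upper, cache);
const second = buildModules({ 'pixi.js': 'engine', 'Dog.js': 'dog v2' }, upper, cache);
console.log(first.rebuilt);  // [ 'pixi.js', 'Dog.js' ]
console.log(second.rebuilt); // [ 'Dog.js' ]
```

The real plugin also has to persist this data between processes and deal with webpack's internal module representation, which this sketch ignores.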

Most of what I want to show is how webpack can get brought into a project. This here is my DuckHunt game.

Pretty simplified replication of the NES game. It used to be using Browserify but now it’s using webpack. It has a big third party dependency: the pixijs rendering engine that handles putting this in canvas or WebGL depending on the consumer.

The webpack config itself is relatively small:

    'use strict';

    var path = require('path');
    var webpack = require('webpack');
    var HardSourcePlugin = require('hard-source-webpack-plugin');

    module.exports = {
      context: __dirname,
      entry: {
        duckhunt: './main.js',
      },
      output: {
        path: path.join(__dirname, 'dist'),
        filename: '[name].js',
      },
      devtool: 'source-map',
      module: {
        loaders: [
          {
            test: /\.js$/,
            exclude: /node_modules/,
            loader: 'babel-loader?cacheDirectory',
            options: { presets: ['es2015'] },
          },
          {
            test: /\.png$/,
            loader: 'file-loader',
          },
          {
            test: /\.json$/,
            loader: 'json-loader',
          },
          {
            test: /\.(mp3|ogg)$/,
            loader: 'file-loader',
          }
        ]
      },
      resolve: {
        modules: ['node_modules'],
        extensions: ['.js', '.min.js'],
      },
      devServer: {
        contentBase: path.join(__dirname, "dist"),
        compress: true,
        inline: true,
        port: 8080
      },
      plugins: []
    };

I want to transpile my ES2015 code into ES5 so I’ve got babel-loader in there. Right now, I’m not actually using webpack to load my PNGs but I want to be doing that. I’m using it to load some JSON data as well. I’m not yet using it to load my audio files, but I want to be doing that too. Still, my build kinda takes a while considering it’s such a small project. Usually my build is about 5 seconds. Let’s see how long we get for this run. Ooo, this one’s even longer. Maybe because I’m screen sharing. That was 12 seconds.

    > DuckHunt-JS@3.1.0 build /home/matt/code/DuckHunt-JS
    > webpack

    Hash: a5454be00ca64ea62a6b
    Version: webpack 2.2.1
    Time: 12051ms
              Asset     Size  Chunks                    Chunk Names
        duckhunt.js  2.26 MB       0  [emitted]  [big]  duckhunt
    duckhunt.js.map   2.8 MB       0  [emitted]         duckhunt
        + 606 hidden modules

Kinda rough. Especially considering I’m not even doing anything with images or audio. Now I’ll put the HardSource plugin back in. I’m kind of cheating here, because I already have an established cache directory since I’ve been using HardSource on this project.

    'use strict';

    var path = require('path');
    var webpack = require('webpack');
    var HardSourcePlugin = require('hard-source-webpack-plugin');

    module.exports = {
      context: __dirname,
      entry: {
        duckhunt: './main.js',
      },
      output: {
        path: path.join(__dirname, 'dist'),
        filename: '[name].js',
      },
      devtool: 'source-map',
      module: {
        loaders: [
          {
            test: /\.js$/,
            exclude: /node_modules/,
            loader: 'babel-loader?cacheDirectory',
            options: { presets: ['es2015'] },
          },
          {
            test: /\.png$/,
            loader: 'file-loader',
          },
          {
            test: /\.json$/,
            loader: 'json-loader',
          },
          {
            test: /\.(mp3|ogg)$/,
            loader: 'file-loader',
          }
        ]
      },
      resolve: {
        modules: ['node_modules'],
        extensions: ['.js', '.min.js'],
      },
      devServer: {
        contentBase: path.join(__dirname, "dist"),
        compress: true,
        inline: true,
        port: 8080
      },
      plugins: [
        new HardSourcePlugin({
          cacheDirectory: path.join(__dirname, 'node_modules/.cache/hardsource/[confighash]'),
          recordsPath: path.join(__dirname, 'node_modules/.cache/hardsource/[confighash]/records.json'),
          configHash: function(webpackConfig) {
            return require('node-object-hash')().hash(webpackConfig);
          }
        })
      ],
    };

This’ll go through and use that cache. It’ll still take a little time, but you can see that’s significantly less; that’s a little less than half the time. This one only took 5 seconds.

    Time: 5012ms
              Asset     Size  Chunks                    Chunk Names
        duckhunt.js  2.26 MB       0  [emitted]  [big]  duckhunt
    duckhunt.js.map   2.8 MB       0  [emitted]         duckhunt
        + 606 hidden modules

Now if I were to change something in my code, let’s say I wanted to add a new state to the dog object. It’s going to rebuild that file because it has changed but it’s going to use the cached version of everything else. It’s not going to blow away my entire webpack HardSource cache that it’s using. So you can see the modules it just ended up rebuilding.

    Time: 6127ms
              Asset     Size  Chunks                    Chunk Names
        duckhunt.js  2.26 MB       0  [emitted]  [big]  duckhunt
    duckhunt.js.map   2.8 MB       0  [emitted]         duckhunt
     [274] ./src/modules/Dog.js 9.2 kB {0} [built]
        + 605 hidden modules

It’s just this one that has changed since the last time it populated the cache. It added a little bit of time to do that [full run in about 6 seconds], but it’s still not 12 seconds; it’s significantly less than that.

This is the simplest configuration that I’ve found to use with HardSource.

    new HardSourcePlugin({
      cacheDirectory: path.join(__dirname, 'node_modules/.cache/hardsource/[confighash]'),
      recordsPath: path.join(__dirname, 'node_modules/.cache/hardsource/[confighash]/records.json'),
      configHash: function(webpackConfig) {
        return require('node-object-hash')().hash(webpackConfig);
      }
    })

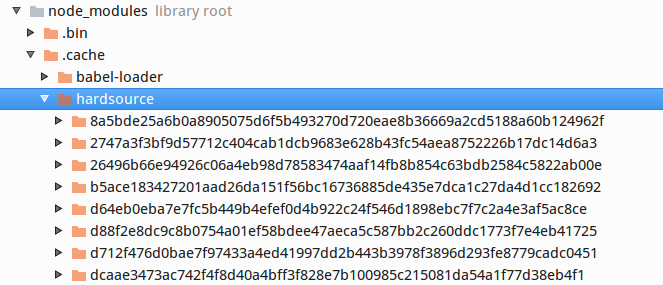

It needs a cacheDirectory and that’s where it should store its cache data, here’s what that looks like:

It can be anywhere. I like to put it in node_modules/.cache/hardsource, but this is user defined. It should be absolute. It will accept relative paths, but more and more things in webpack really want absolute paths, so it’s a good idea to use path.join here.

The recordsPath is something that may already be set depending on your project. What this does is let webpack store, in a persistent way, the IDs that it assigns to your application’s modules, so they stay the same from build to build.

The configHash property here is what determines how the cache directories are named. This is just running an object hash on my webpack config, which is a JavaScript object. Let’s say I have multiple webpack configs, or I change this config to add a different loader: that’s going to make sure that a new cache is created.

This kinda brings up one of the gotchas with this plugin, which is that by default it really doesn’t know where to put your stuff. If I didn’t have this property here it would store everything inside this directory, but it wouldn’t know when to bust, when to make a new cache. Giving it some knowledge about that is going to help distinguish these things.
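As a rough illustration of how a config hash can drive the cache directory name, the sketch below uses Node's built-in crypto in place of node-object-hash. `stableStringify`, `configHash`, and `cacheDirectoryFor` are hypothetical helpers written for this example, and the plugin's own placeholder handling may differ.

```javascript
'use strict';
const crypto = require('crypto');
const path = require('path');

// Serialize a config with sorted keys so key order does not affect the hash.
// RegExps and functions are turned into strings because JSON would drop them.
function stableStringify(value) {
  if (value instanceof RegExp || typeof value === 'function') {
    return JSON.stringify(String(value));
  }
  if (Array.isArray(value)) {
    return '[' + value.map(stableStringify).join(',') + ']';
  }
  if (value && typeof value === 'object') {
    return '{' + Object.keys(value).sort().map(function (key) {
      return JSON.stringify(key) + ':' + stableStringify(value[key]);
    }).join(',') + '}';
  }
  return String(JSON.stringify(value));
}

// Assumption: a stand-in for require('node-object-hash')().hash(config).
function configHash(config) {
  return crypto.createHash('sha1').update(stableStringify(config)).digest('hex');
}

// Fill in the [confighash] placeholder of a cacheDirectory template.
function cacheDirectoryFor(template, config) {
  return template.replace('[confighash]', configHash(config));
}

// process.cwd() is used here instead of __dirname so the snippet runs anywhere.
const template = path.join(process.cwd(), 'node_modules/.cache/hardsource/[confighash]');
const dev = { entry: './main.js', module: { loaders: [{ test: /\.js$/, loader: 'babel-loader' }] } };
const reordered = { module: { loaders: [{ loader: 'babel-loader', test: /\.js$/ }] }, entry: './main.js' };
const withPng = { entry: './main.js', module: { loaders: [{ test: /\.png$/, loader: 'file-loader' }] } };

console.log(cacheDirectoryFor(template, dev) === cacheDirectoryFor(template, reordered)); // true
console.log(cacheDirectoryFor(template, dev) === cacheDirectoryFor(template, withPng));   // false
```

The same config always maps to the same directory, while changing a loader yields a new directory, which is the behavior described above.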

The other thing that HardSource has under the hood is a concept of your environment. This is what the default environment hash looks like:

    environmentHash: {
      root: process.cwd(),
      directories: ['node_modules'],
      files: ['package.json'],
    },

It takes your root directory, watches node_modules and watches your package.json. What we’re trying to do by default is support the npm ecosystem. So if you add a new node_module we’re assuming there are going to be big changes we need to make a new cache for. If you’re updating an existing thing in node_modules or changing a version we want to be able to bust your cache for that too. You’ll notice though this isn’t purging anything. And that’s one of the things that you should keep in mind. If you have a really large project with a really large build artifact these cache directories can build up on your machine and take up space so it’s a good idea to go through and clean them out. There’s an open issue right now where we want to look at trying to figure out a property that might be able to help with that logic so that we could do some purging but it’s a little bit complicated because we obviously don’t want to purge things that the user might need. So right now that’s a task that’s left to the folks who adopt this plugin to stay on top of.
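One way to picture the environment hash is as a single digest over the listed files and directories. The sketch below is an assumption about the general idea, not the plugin's actual algorithm: the hypothetical `environmentHash` function hashes file contents plus the names of each directory's entries, so installing a package changes the result.

```javascript
'use strict';
const crypto = require('crypto');
const fs = require('fs');
const os = require('os');
const path = require('path');

// Hypothetical sketch: fold the contents of the listed files and the names
// of the entries in the listed directories into a single hash.
function environmentHash(env) {
  const hash = crypto.createHash('md5');
  env.files.forEach(function (file) {
    const full = path.join(env.root, file);
    hash.update(file);
    if (fs.existsSync(full)) { hash.update(fs.readFileSync(full)); }
  });
  env.directories.forEach(function (dir) {
    const full = path.join(env.root, dir);
    hash.update(dir);
    if (fs.existsSync(full)) {
      fs.readdirSync(full).sort().forEach(function (name) { hash.update(name); });
    }
  });
  return hash.digest('hex');
}

// Usage against a throwaway project directory.
const root = fs.mkdtempSync(path.join(os.tmpdir(), 'envhash-'));
fs.mkdirSync(path.join(root, 'node_modules'));
fs.writeFileSync(path.join(root, 'package.json'), '{"dependencies":{}}');
const env = { root: root, directories: ['node_modules'], files: ['package.json'] };

const before = environmentHash(env);
fs.mkdirSync(path.join(root, 'node_modules', 'pixi.js')); // simulate installing a package
const after = environmentHash(env);
console.log(before !== after); // true
```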

The other thing that you should be aware of when using the webpack HardSource plugin is that it doesn’t always play well with other plugins. It seems to work well with the main things that we use often, but that’s not to say that it’s going to play well with every single plugin. The issues section on GitHub is a good place to look to see what’s going on. The only thing that I’m aware of right now is a change that was recently made to the offline plugin that caused some interop problems that seem to be offline plugin related. There’s an open issue about that. webpack-dev-server, which released a new version about a week ago, had a previous version that was broken and didn’t work with HardSource for some reason. The current version of webpack-dev-server works appropriately. It’s kind of hard to track these things down. If you notice this not working with a plugin that you have on your project, please open an issue and let us know.

The only other thing that you should really be aware of when using HardSource is loaders or dependencies that, when bundled, aren’t processed in a deterministic way. In other words, if you have some loader that changes files and it’s not always going to output the exact same bundled version of that file, so the output changes every single time it runs even if the file doesn’t change, HardSource isn’t going to be able to cache that. That’s something to be aware of. The majority of loaders are deterministic and cacheable, but because the webpack ecosystem is so large it’s possible that there are things out there that won’t work. So far we’ve done a good job of trying to find those things and track down what makes them not work. Definitely check out the issues list to see if there’s anything right now that you may be using that doesn’t work. If you run into any problems outside of that, a good place to check is StackOverflow. We try to be active on there answering questions.
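A quick way to reason about the determinism requirement is to run a transform several times on the same input and compare the results. This is a hypothetical check written for illustration (`isDeterministic` is not part of HardSource or webpack), with plain functions standing in for loaders.

```javascript
'use strict';

// Run a loader-like transform several times on the same input and report
// whether every run produced identical output.
function isDeterministic(transform, source, runs) {
  const expected = transform(source);
  for (let i = 1; i < (runs || 3); i++) {
    if (transform(source) !== expected) { return false; }
  }
  return true;
}

// A stable transform versus one that stamps a build counter into its output.
let buildCount = 0;
const stable = function (src) { return src.trim() + '\n'; };
const stamped = function (src) { return '/* build ' + (++buildCount) + ' */\n' + src; };

console.log(isDeterministic(stable, 'var a = 1; '));  // true
console.log(isDeterministic(stamped, 'var a = 1; ')); // false
```

A transform like `stamped` produces a different result on every run, so a cached copy of its output would never match a fresh build.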

Let me show you, before I turn it over to more broad questions, what the adding of HardSource looked like in this project.

It really was adding these two dependencies: hardsource and then node-object-hash which is what we’re using right here in the config to name our configHash so that it knows when to bust its cache.

    configHash: function(webpackConfig) {
      return require('node-object-hash')().hash(webpackConfig);
    }

And that’s it. You can see here how when I first integrated the HardSource plugin I did it with relative paths, but that’s ok. We added the HardSource plugin and required it and that’s it. I’m going to stop sharing my screen now and let’s check back in to see what kind of questions we have coming in.

Andrew wants to know:

What is the difference between this and webpack’s existing cache option?

Z: I can speak to that a little. One part is that there isn’t strictly a difference; there are parts of HardSource where it makes use of what the cache option does. What the cache option does is create a memory store which keeps copies of your built modules. Then, when it does a rebuild with webpack-dev-server or webpack --watch, it builds a reference for a module, looks inside the cache to see if it already exists, and if possible reuses it. HardSource works similarly in that it creates those references so that there’s less work that needs to be done hitting the file system, but it also prefills that cache where possible, and that provides a lot of performance. Also, the versions that are put in that cache are prebuilt.
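The difference between a memory-only cache and one that is prefilled from disk can be sketched in a few lines. This is a simplified illustration under the assumption that cache entries are plain JSON-serializable values; `loadCache` and `saveCache` are hypothetical helpers, and HardSource's real on-disk format is more involved.

```javascript
'use strict';
const fs = require('fs');
const os = require('os');
const path = require('path');

// Load a previously saved cache from disk into a Map (empty on first run).
function loadCache(file) {
  if (!fs.existsSync(file)) { return new Map(); }
  return new Map(Object.entries(JSON.parse(fs.readFileSync(file, 'utf8'))));
}

// Persist the in-memory cache so the next process can start warm.
function saveCache(file, cache) {
  const plain = {};
  cache.forEach(function (value, key) { plain[key] = value; });
  fs.writeFileSync(file, JSON.stringify(plain));
}

const cacheFile = path.join(fs.mkdtempSync(path.join(os.tmpdir(), 'warm-')), 'cache.json');

// "First process": cold start, build something, save before exiting.
const cold = loadCache(cacheFile);
const coldSize = cold.size;
cold.set('./src/modules/Dog.js', 'built output for Dog.js');
saveCache(cacheFile, cold);

// "Second process": the memory cache is prefilled before any build work.
const warm = loadCache(cacheFile);
console.log(coldSize, warm.has('./src/modules/Dog.js')); // 0 true
```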

Matt: Yeah, it’s really like every step of the process and not just that initial file system hit that HardSource is trying to optimize for. The file system hit is very real. It takes a long time to resolve your dependencies especially if it’s not defined in code with an absolute path, and it takes a lot of time to get module data but all the other pieces that go along with it inside webpack to build up your bundled assets is what we’re trying to optimize for. I think there’s a push happening to get HardSource into webpack core. Is that right?

Z: Yeah, there’s a lot of desire for it because it would be really great to have it as a first party effort. Now that webpack2 is out that’s going to become a much bigger reality, likely in a future webpack major version change, because the webpack core team is very much planning on having more regular updates, so that’ll make it more capable. HardSource was first released in September of 2016 and that was still prime time for webpack2’s main development. It could have gone in then, but with all the other changes webpack2 was making, it’s more of a reality now. The main path is, like, figuring out what in HardSource we can generalize so it’s more available to the community to interact with on a deeper level.

Matt: And just to say explicitly, HardSource does work with webpack2 and webpack1. It’s just not built into the core of either of them. Yet. Well I guess it’ll never be built into webpack1, that ship has sailed.

Z: Yes.

Are there any other questions about how this works under the hood or how to integrate it into your project? The integration is meant to be pretty simple. The README goes through each specific piece of the plugin config options. We can kind of review those at a high level and talk about what they are.

    new HardSourceWebpackPlugin({
      // Either an absolute path or relative to output.path.
      cacheDirectory: 'path/to/cache/[confighash]',
      // Either an absolute path or relative to output.path. Sets webpack's
      // recordsPath if not already set.
      recordsPath: 'path/to/cache/[confighash]/records.json',
      // Optional field. Either a string value or function that returns a
      // string value.
      configHash: function(webpackConfig) {
        // Build a string value used by HardSource to determine which cache to
        // use if [confighash] is in cacheDirectory or if the cache should be
        // replaced if [confighash] does not appear in cacheDirectory.
        //
        // node-object-hash on npm can be used to build this.
        return require('node-object-hash')().hash(webpackConfig);
      },
      // Optional field. This field determines when to throw away the whole
      // cache if for example npm modules were updated.
      environmentHash: {
        root: process.cwd(),
        directories: ['node_modules'],
        files: ['package.json'],
      },
      // `environmentHash` can also be a function (use it in place of the
      // object form above) that returns a string, or a promise resolving to
      // a string, that is a hash of the dependency environment.
      environmentHash: function() {
        // Return a string or a promise resolving to a string of a hash of the
        // dependency environment.
        return new Promise(function(resolve, reject) {
          require('fs').readFile(__dirname + '/yarn.lock', function(err, src) {
            if (err) {return reject(err);}
            resolve(
              require('crypto').createHash('md5').update(src).digest('hex')
            );
          });
        });
      },
    }),

Again to remind you, the most minimal thing that you need to provide is: a cacheDirectory; a recordsPath, if you’re not already using one (if this is set elsewhere in your webpack config then you don’t need to specify it here); and some kind of configHash. Another option you could use is a process environment variable or something to name your folders. The trouble there is that you’re then going to have a bunch of things in your cache that might not be right (in projects with multiple webpack configs), which is why we recommend hashing your webpack config. We recommend that the environmentHash property is where you take care of the other busting logic; that will update any existing things in your cache automatically for you without necessarily creating a whole new cache folder. You can have a custom environmentHash function that looks at other things you may have in your project, like a yarn.lock file, or anything else that doesn’t necessarily directly impact package.json or your node_modules directory (like a vendor directory).

Sounded like we might have had another question come through:

How do you think this would interact with the webpack DLL plugin?

Matt: Z and I were just talking about this this morning. Do you want to talk in a little more detail about how that might work? I know the idea is that with the DLL plugin we could have a dedicated implementation of HardSource to better take advantage of what HardSource is doing. Do you want to talk more about how that works right now?

Z: Sure! Right now, there’s a change we made in December to HardSource to support the DLL plugin. We’re not able to take full advantage of DLL plugins, but HardSource plus DLL will still be faster than just DLL. The change that was needed (in HardSource) was this: beforehand, if you depended on a dependency inside your DLL module it would have to rebuild that every time, but now HardSource is able to be smarter about that and only rebuild when, well, specifically your module changes. So if you depend on a DLL dependency it’s fine, the cache works. DLL is still able to give you a lot of performance as well that you wouldn’t get with HardSource alone. In the future what we want is a plugin dependency of HardSource. The trouble right now is that DLL has internal knowledge that HardSource cannot access. So HardSource needs to expose an interface between the DLL plugin and a HardSource DLL plugin; the HardSource DLL plugin would be given that specific knowledge that the DLL plugin has so it can more deeply integrate and provide even better performance while also being smart about that and not getting in your way.

When might I need to provide the environmentHash config option?

Matt: What I would say to that is: if you’re using yarn you should look at the function that is provided inside the README of the current HardSource version, because by default HardSource is only going to look at node_modules and package.json for changes. The other time you might want to do it is if you are vendoring dependencies, as in you actually have a vendor folder where you’re storing dependencies that you need cached. You’d specify that inside, or maybe better to say, extend the default config to include the vendor directory:

    environmentHash: {
      root: process.cwd(),
      directories: ['node_modules', 'vendor'],
      files: ['package.json'],
    },

That way any of the scripts you’re including in that vendor directory will be cached. I should say if you don’t do this, the only consequence is that you’ll have to wait for those things to be processed by webpack, it’s not going to break HardSource or anything like that.

Sounds like the best way to use this is npm run dev --enable-cache. Is there any use for this in QA or Prod?

Matt: I think the only thing I would say there is that it depends on how long your build time is. If you have, say, a CI run that’s taking 20 or 30 seconds to get you feedback, your CI box might do well to have a cache available. But for a prod build, it really depends [on your deployment pipeline]. If you don’t mind waiting, fine. But it’s certainly true that the biggest impact we’ve seen is on active development, and it does work with webpack-dev-server.

Does the webpack build benefit from using this plugin if I’m using webpack’s watch and keepalive configs and I touch a file? Or is this only beneficial between launches of webpack?

Matt: Correct me if I’m wrong, Z, but as long as your current webpack process has knowledge of HardSource, meaning your config has been modified to use HardSource, it should play well with watch and it should play well with dev-server. If it detects a change in a file, it should still be using your cache for anything that hasn’t changed. So you shouldn’t have any issues using watch or keepalive configs along with webpack HardSource; you should still get the benefit.

Z: Yeah, I can add to that a little. In part that has to do with other options (in webpack); there are some things you can still get performance benefits with that are less obvious. HardSource supports source maps. If you’re using, say, eval-source-map for your dev environment, so that each module internally stores its source map instead of there being one larger source map, which is the normal recommendation for development use, HardSource provides a benefit there because, per its name, it’s literally keeping a hardened version in its cache. It also includes a hardened version of the source map, so when it’s rebuilding webpack doesn’t need to redo as much source map work. There are also other small benefits in that same way. The cached versions are not as flexible as the normal webpack versions, and HardSource immediately drops its cached version if webpack needs to do more work with it.

Matt: There are a couple of questions here and I want to share my screen again just to show that minimal config. The other thing I’m going to do is put a link in chat: that DuckHunt game is an open source project of mine on GitHub, so you can always reference that, so here’s a link to that. Now to share my screen again.

For the minimal config, you have to instantiate the HardSource plugin, and you have to give it a cacheDirectory, a place to put its cache data. If it’s not already specified elsewhere you have to give it a recordsPath, and this is what tells webpack how to persist the IDs that it assigns to your various modules when it’s running your build. And you have to give it some kind of mechanism for hashing your config to know when to bust the cache and what to name those folders that it’s going to use. The recommended way to do that is using node-object-hash, just something that hashes a JavaScript object, and passing it your webpack config. This callback function is expecting to be passed the webpack config. This here is the minimal config I recommend:

    new HardSourcePlugin({
      cacheDirectory: path.join(__dirname, 'node_modules/.cache/hardsource/[confighash]'),
      recordsPath: path.join(__dirname, 'node_modules/.cache/hardsource/[confighash]/records.json'),
      configHash: function(webpackConfig) {
        return require('node-object-hash')().hash(webpackConfig);
      }
    })

The documentation in the webpack HardSourcePlugin repo provides multiple things that are available, and identifies the ones that are optional. configHash is marked optional, and it is, but then you’d only have a single cache and things might be screwed up for you (things like dev-server make unseen modifications to your config, which should be cached independently). Even though it’s optional I suggest you provide it all the time. The environment hash, I’ll review again because I think it’s relevant to this question. You really only need to provide the environmentHash if you’re doing something like vendoring dependencies that are not inside node_modules or you’re using yarn or something else where you’d want to trigger your build based on a change to a file that is not in node_modules, not in your vendor directory if you’re using it, and not in package.json. So something that’s totally outside of your project in that way. And again, if you fail to provide this, and you are using vendored dependencies you’re just not going to get the benefit of us caching that, it shouldn’t break anything.

Can invalid cache directories be detected, and all cache directories that don’t match be removed?

Matt: So theoretically yes. There’s an issue that’s trying to explore the best way to express that in a config parameter which is the challenge right now. That is something that’s manual. You’re responsible for purging your own cache. If you’re not changing your config a lot you probably won’t have a bunch of different cache directories. But it’s something to keep in mind. I do think we’ll solve that. It’s not a problem that’s like “OMG how do we purge the cache” it’s just something we haven’t had a chance to do yet.

Z: I want to add something to that.

Matt: Yeah please do.

Z: The main reason config hash exists... so, config hash is an option. You don’t need to use config hash if you’re only ever going to have one configuration with webpack and HardSource. You can drop that, you can drop the configHash from the paths and you’ll have one cache directory. That will be busted every time your environment changes through the environment hash. configHash exists for webpack environments that have multiple configurations. A lot of projects will have one webpack config for development, and one for production. Or they are using generated configs. And one of the most common uses, I’d really say, is people using webpack and webpack-dev-server. It’s not super obvious, but webpack-dev-server, when given a configuration, makes small changes to it. Specifically it automatically enables the hot replacement plugin, and so if you’re using webpack and webpack-dev-server with the same configuration you want to use configHash because it’ll give you two cache directories for each of those. Well, it’ll give you one cache directory for each.
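Since every distinct config hash gets its own directory and the plugin does not purge old ones, a manual cleanup step could look something like the following. This is a hypothetical script, not a HardSource feature: `pruneStaleCaches` simply deletes every cache subdirectory except the one for the hash you name, and it assumes a Node version that provides `fs.rmSync`.

```javascript
'use strict';
const fs = require('fs');
const os = require('os');
const path = require('path');

// Hypothetical helper: delete every subdirectory of cacheRoot except the one
// named after the current config hash. Returns the names that were removed.
function pruneStaleCaches(cacheRoot, currentHash) {
  const removed = [];
  fs.readdirSync(cacheRoot).forEach(function (name) {
    const full = path.join(cacheRoot, name);
    if (name === currentHash || !fs.statSync(full).isDirectory()) { return; }
    fs.rmSync(full, { recursive: true, force: true });
    removed.push(name);
  });
  return removed.sort();
}

// Usage against a throwaway directory with three made-up hash names.
const cacheRoot = fs.mkdtempSync(path.join(os.tmpdir(), 'hardsource-'));
['aaa111', 'bbb222', 'ccc333'].forEach(function (name) {
  fs.mkdirSync(path.join(cacheRoot, name));
});

const removed = pruneStaleCaches(cacheRoot, 'bbb222');
console.log(removed);                   // [ 'aaa111', 'ccc333' ]
console.log(fs.readdirSync(cacheRoot)); // [ 'bbb222' ]
```

Note that with webpack and webpack-dev-server sharing a config you would want to keep both of their directories, so a real script would accept a list of hashes to keep.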

Matt: To that point we might be able to answer Jay’s other question here:

How will HardSource save time while running mocha tests each time you make a change on a JS file? Because that’s a huge pain.

Matt: It is. For projects that I’ve been on we try to run a separate webpack config for CI or any kind of testing scenario. To that end we should be able to cache that as a separate cache directory that (configHash) will match differently and as a result generate a different cache directory. So again, you should be able to just run those tests and webpack will know to use those cached files. If you’re somehow writing tests that are not operating on your webpack builds, like if you’re somehow excluding webpack from your test environment then there’s nothing that HardSource can really do. I’m not sure exactly how you might be doing that I guess it would depend on the application.

I’m explicitly excluding buffering crypto from the webpack bundle with Node. My second build with the HardSource plugin seems to fail.

Matt: This is something we’re happy to help debug, put some more data about that on StackOverflow and we can take a little more time to dig into this. I assume it’s failing to resolve the module or something like that. It shouldn’t be doing that since if it can’t resolve the module it should go out to the filesystem and get it.

Can you talk a little more generally about strategies to reduce webpack build time and size?

Matt: Yeah. I think the high level thing I’d say is that we want to do another screencast about bundle analysis, using webpack’s profiler to look and find what you’re requiring. Theoretically we should be using tree shaking to, like, toss out unneeded exports, but that doesn’t always work the way we want it to and we still end up with unnecessary code in our bundles. webpack’s profiler is a great way to identify that, and also to identify what is causing a bundle to be so large. So, like, my webpack bundle for Duck Hunt is something like 2.6 MB, which is pretty big, and the reason for that is a lot of lodash dependencies that I’m using and the rendering engine and its associated dependencies. By looking at the bundle analysis you can look at that and go, ok, well, this particular thing that I’m bringing in is doing X, Y, Z for me. Maybe there’s a way I can live without it or write a first party implementation that involves fewer edge cases. A lot of times the dependencies that we bring in, like lodash, have really powerful functions that are made to solve for lots of possible edge cases when you call them. If you know you don’t have any of those edge cases you can save a lot of code. That’s not necessarily the best advice depending on what you’re building, since you may run into those edge cases and not know there’s a risk.
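As a small starting point for that kind of analysis, the JSON that webpack can emit (for example via `webpack --json`) includes a list of modules with their sizes. The sketch below assumes that shape (`modules` entries with `name` and `size` in bytes); `largestModules` is a hypothetical helper, and the sample numbers are made up for illustration rather than measured from DuckHunt-JS.

```javascript
'use strict';

// Given a webpack stats object, return the `count` largest modules by
// reported size, biggest first, with sizes converted to kB.
function largestModules(stats, count) {
  return (stats.modules || [])
    .slice()
    .sort(function (a, b) { return b.size - a.size; })
    .slice(0, count)
    .map(function (mod) {
      return { name: mod.name, kB: +(mod.size / 1000).toFixed(1) };
    });
}

// Made-up sample data in the assumed stats shape.
const stats = { modules: [
  { name: './src/modules/Dog.js', size: 9200 },
  { name: './~/pixi.js/lib/index.js', size: 120000 },
  { name: './~/lodash/lodash.js', size: 540000 }
] };

console.log(largestModules(stats, 2));
// [ { name: './~/lodash/lodash.js', kB: 540 },
//   { name: './~/pixi.js/lib/index.js', kB: 120 } ]
```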

Z, do you have any additional advice for reducing build time and size?

Z: I can think of a few things. If you haven’t used it yet, there’s a package called HappyPack, a utility that works with webpack to run loaders in their own Node processes. It doesn’t work with every loader and requires much more manual configuration, but if you can set it up it can be a pretty nice boost to build time: if you have a four-core CPU on your machine, you can run four times the number of loaders that you’d normally be able to. That also works well with HardSource, because HardSource is still able to cache. HardSource’s cache stores the work of all the loaders, and when multiple files change at the same time you get multiple modules being built at the same time.
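As a rough illustration of that setup, a webpack config fragment using HappyPack might look like the following. It is a sketch under assumptions: the `js` id, the thread count of 4, and the choice of `babel-loader` are placeholders, and it requires the `happypack` package to be installed.

```javascript
const HappyPack = require('happypack');

module.exports = {
  module: {
    rules: [
      {
        test: /\.js$/,
        exclude: /node_modules/,
        // Hand matching files to HappyPack instead of calling the loader directly.
        use: 'happypack/loader?id=js',
      },
    ],
  },
  plugins: [
    new HappyPack({
      // Must match the id in the loader query string above.
      id: 'js',
      // Assumed worker count for a four-core machine.
      threads: 4,
      // The loaders HappyPack runs in its worker processes (placeholder choice).
      loaders: ['babel-loader'],
    }),
  ],
};
```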

Christopher also asks the same question about the DLL plugin. The DLL plugin is typically used for improving build time, and if you want to consider it for size, a DLL can be shared across a large number of builds, so users can hold on to a DLL that is a month old but still works, and it can be hard cached in their browser. The DLL plugin lets you build a bunch of dependencies together: if you’re using React, you can put React and ReactDOM into a single DLL, and all of your future builds needing React and ReactDOM will use that DLL, giving you a build time improvement and giving the user a caching benefit.
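A minimal sketch of the React example as a separate DLL build config, assuming a `dll` output directory and a `vendor` entry name (both placeholders), could look like this:

```javascript
const path = require('path');
const webpack = require('webpack');

module.exports = {
  // Bundle the rarely changing dependencies into one DLL.
  entry: { vendor: ['react', 'react-dom'] },
  output: {
    path: path.join(__dirname, 'dll'),
    filename: '[name].dll.js',
    // Global variable the DLL is exposed under; must match DllPlugin's name.
    library: '[name]_dll',
  },
  plugins: [
    new webpack.DllPlugin({
      name: '[name]_dll',
      // Manifest that the application build reads to locate DLL modules.
      path: path.join(__dirname, 'dll', '[name]-manifest.json'),
    }),
  ],
};
```

The application's own config would then add `webpack.DllReferencePlugin` pointing at the generated manifest, and the page would load the DLL script before the application bundle.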

Other size stuff… size stuff is pretty tricky.

Matt: Yeah, I think what you were saying previously is that it’s not just the size of your bundle, it’s how you’re going to chunk it out and give it to your users. So it’s important to manage size, but also to think about using DLLs for async chunks or anything that prevents the user from having to take the whole thing on at once. But again, whether you can actually do that and still have your application function depends on your application, because for some stuff you really do need everything.

Z: Yeah, if you’re not using HardSource, on size and that caching stuff, recordsPath, which you can use outside of HardSource, is a really great option because it helps make your builds more deterministic: you can make one build today, and when you make a build tomorrow having made no changes, it will be an identical build. webpack 2 does some other things that make this more likely even without recordsPath. But with recordsPath you get those benefits, you get them with async chunks too, and you can produce builds that are generally more cacheable for users.

Matt: Cool. Well, I think we’re about out of time, but if you have any questions or problems that you run into while using this, either open an issue or go to Stack Overflow. We’re going to try to keep our eyes there for general things like “hey, this isn’t working with my project.” And of course we welcome pull requests and suggestions on how to make this better. If you have any thoughts on the cache purging problem we’d love to hear them. Feel free to reach out at any time [hello@bocoup.com] if you want to find other ways that we could work together. Thanks everyone!

Laura: Hey everyone, thanks so much for joining. As Matt mentioned, we’re going to be sending out the recording of this webinar and posting it on YouTube. Thanks so much for coming and we hope you have a great day!
